Deeper Dive: Overriding Work Pool Job Variables

As described in the Deploying Flows to Work Pools and Workers guide, there are two ways to deploy flows to work pools: using a prefect.yaml file or using the .deploy() method.

In both cases, you can override job variables on a work pool for a given deployment.

Exactly which job variables can be overridden depends on the type of work pool you're using, but this guide explores some common patterns for overriding job variables with both deployment methods.

Background

First of all, what are "job variables"?

Job variables are infrastructure-related values that are configurable on a work pool and that may affect how your flow run executes on your infrastructure. Job variables can be overridden on a per-deployment or per-flow-run basis, allowing you to dynamically change infrastructure from the work pool's defaults depending on your needs.

Let's use env - the only job variable that is configurable for all work pool types - as an example.

When you create or edit a work pool, you can specify a set of environment variables that will be set in the runtime environment of the flow run.

For example, you might want a certain deployment to have the following environment variables available:

    {
      "EXECUTION_ENVIRONMENT": "staging",
      "MY_NOT_SO_SECRET_CONFIG": "plumbus"
    }

Rather than hardcoding these values into your work pool in the UI and making them available to all deployments associated with that work pool, you can override these values on a per-deployment basis.

Let's look at how to do that.

How to override job variables on a deployment

Say we have the following repo structure:

    » tree
    .
    ├── README.md
    ├── requirements.txt
    ├── demo_project
    │   ├── daily_flow.py

... and we have a demo_project/demo_flow.py file like this:

    import os

    from prefect import flow, task


    @task
    def do_something_important(not_so_secret_value: str) -> None:
        print(f"Doing something important with {not_so_secret_value}!")


    @flow(log_prints=True)
    def some_work():
        # Falls back to "local" when the variable is not set.
        environment = os.environ.get("EXECUTION_ENVIRONMENT", "local")
        print(f"Coming to you live from {environment}!")

        # This one has no default, so the flow fails loudly if it is missing.
        not_so_secret_value = os.environ.get("MY_NOT_SO_SECRET_CONFIG")
        if not_so_secret_value is None:
            raise ValueError("You forgot to set MY_NOT_SO_SECRET_CONFIG!")

        do_something_important(not_so_secret_value)

Using a prefect.yaml file

In this case, let's also say we have the following deployment definition in a prefect.yaml file at the root of our repository:

    deployments:
    - name: demo-deployment
      entrypoint: demo_project/demo_flow.py:some_work
      work_pool:
        name: local
      schedule: null

Note

While not the focus of this guide, note that this deployment definition uses a default "global" pull step, because one is not explicitly defined on the deployment. For reference, here's what that would look like at the top of the prefect.yaml file:

    pull:
    - prefect.deployments.steps.git_clone: &clone_repo
        repository: https://github.com/some-user/prefect-monorepo
        branch: main

Hard-coded job variables

To provide the EXECUTION_ENVIRONMENT and MY_NOT_SO_SECRET_CONFIG environment variables to this deployment, we can add a job_variables section to our deployment definition in the prefect.yaml file:

    deployments:
    - name: demo-deployment
      entrypoint: demo_project/demo_flow.py:some_work
      work_pool:
        name: local
        job_variables:
          env:
            EXECUTION_ENVIRONMENT: staging
            MY_NOT_SO_SECRET_CONFIG: plumbus
      schedule: null

... and then run prefect deploy -n demo-deployment to deploy the flow with these job variables.

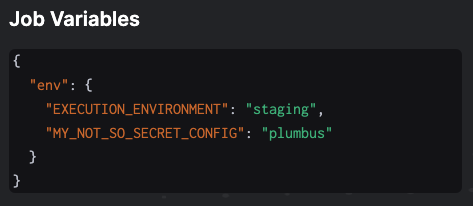

We should then be able to see the job variables in the Configuration tab of the deployment in the UI:

Using existing environment variables

If you want to use environment variables that are already set in your local environment, you can template these in the prefect.yaml file using the {{ $ENV_VAR_NAME }} syntax:

    deployments:
    - name: demo-deployment
      entrypoint: demo_project/demo_flow.py:some_work
      work_pool:
        name: local
        job_variables:
          env:
            EXECUTION_ENVIRONMENT: "{{ $EXECUTION_ENVIRONMENT }}"
            MY_NOT_SO_SECRET_CONFIG: "{{ $MY_NOT_SO_SECRET_CONFIG }}"
      schedule: null

Note

This assumes that the machine where prefect deploy is run has these environment variables set:

    export EXECUTION_ENVIRONMENT=staging
    export MY_NOT_SO_SECRET_CONFIG=plumbus

As before, run prefect deploy -n demo-deployment to deploy the flow with these job variables, and you should see them in the UI under the Configuration tab.
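To make the `{{ $ENV_VAR_NAME }}` templating more concrete, here is a minimal sketch of how such a placeholder could be resolved against the local environment. It is not Prefect's implementation: `render_env_placeholders` is a hypothetical helper, and leaving unset variables untouched is an assumption of this sketch rather than documented behavior.

```python
import os
import re

# Matches placeholders of the form {{ $NAME }}; whitespace inside the braces is optional.
_ENV_PLACEHOLDER = re.compile(r"\{\{\s*\$([A-Za-z_][A-Za-z0-9_]*)\s*\}\}")


def render_env_placeholders(value, environ=None):
    """Replace each {{ $NAME }} in `value` with environ[NAME].

    Hypothetical helper for illustration only; not part of Prefect.
    Placeholders whose variable is unset are left unchanged (an assumption
    of this sketch) so the gap is easy to spot.
    """
    env = os.environ if environ is None else environ

    def substitute(match):
        name = match.group(1)
        # Fall back to the original placeholder text when the variable is missing.
        return env.get(name, match.group(0))

    return _ENV_PLACEHOLDER.sub(substitute, value)


if __name__ == "__main__":
    fake_env = {"EXECUTION_ENVIRONMENT": "staging"}
    print(render_env_placeholders("{{ $EXECUTION_ENVIRONMENT }}", fake_env))
    print(render_env_placeholders("{{ $MY_NOT_SO_SECRET_CONFIG }}", fake_env))
```

Passing a plain dictionary as `environ` keeps the sketch easy to try without touching the real shell environment.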

Using the .deploy() method

If you're using the .deploy() method to deploy your flow, the process is similar, but instead of having your prefect.yaml file define the job variables, you can pass them as a dictionary to the job_variables argument of the .deploy() method.

We could add the following block to the demo_project/daily_flow.py file from the repo structure above:

    import os

    from prefect import flow

    if __name__ == "__main__":
        flow.from_source(
            source="https://github.com/zzstoatzz/prefect-monorepo.git",
            entrypoint="src/demo_project/demo_flow.py:some_work"
        ).deploy(
            name="demo-deployment",
            work_pool_name="local",  # can only .deploy() to a local work pool in prefect>=2.15.1
            job_variables={
                "env": {
                    # Values are read from the environment of the machine running this script.
                    "EXECUTION_ENVIRONMENT": os.environ.get("EXECUTION_ENVIRONMENT", "local"),
                    "MY_NOT_SO_SECRET_CONFIG": os.environ.get("MY_NOT_SO_SECRET_CONFIG")
                }
            }
        )

Note

The above example works assuming a couple of things:

- The machine where this script is run has these environment variables set:

      export EXECUTION_ENVIRONMENT=staging
      export MY_NOT_SO_SECRET_CONFIG=plumbus

- demo_project/daily_flow.py already exists in the repository at the specified path.

Running this script with something like:

python demo_project/daily_flow.py

... will deploy the flow with the specified job variables, which should then be visible in the UI under the Configuration tab.
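One thing to watch in the script above: `os.environ.get("MY_NOT_SO_SECRET_CONFIG")` returns `None` when the variable is unset, so a missing value may only surface once the flow runs. The sketch below shows one way to fail at deploy time instead. `build_env_job_variables` is a hypothetical helper (not part of Prefect) that builds the `{"env": {...}}` dictionary and raises if a required variable is missing.

```python
import os


def build_env_job_variables(required, defaults=None, environ=None):
    """Return {"env": {...}} for use as a job_variables argument.

    Hypothetical helper for illustration only; not part of Prefect.
    required: names that must be present in the environment.
    defaults: mapping of optional names to fallback values.
    """
    source = os.environ if environ is None else environ

    # Collect every missing name so the error reports all of them at once.
    missing = [name for name in required if name not in source]
    if missing:
        raise ValueError(f"Missing environment variables: {', '.join(missing)}")

    # Optional variables use the environment value when set, else the fallback.
    env = {name: source.get(name, fallback) for name, fallback in (defaults or {}).items()}
    # Required variables always come from the environment.
    env.update({name: source[name] for name in required})
    return {"env": env}


if __name__ == "__main__":
    demo_environ = {"MY_NOT_SO_SECRET_CONFIG": "plumbus"}
    print(
        build_env_job_variables(
            required=["MY_NOT_SO_SECRET_CONFIG"],
            defaults={"EXECUTION_ENVIRONMENT": "local"},
            environ=demo_environ,
        )
    )
```

The returned dictionary could then be passed as `job_variables=...` in the `.deploy()` call.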

How to override job variables on a flow run

When running flows, you can pass in job variables that override any values set on the work pool or deployment. Any interface that runs deployments can accept job variables.
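The layering described here (work pool defaults, then deployment overrides, then flow run overrides) can be pictured with a small model. This is an illustrative sketch only, not Prefect's resolution logic: `resolve_job_variables` is a hypothetical function, and it assumes later layers replace top-level keys while entries under `env` are merged key by key. The actual behavior for a given work pool type may differ.

```python
from copy import deepcopy


def resolve_job_variables(work_pool_defaults, deployment_overrides=None, flow_run_overrides=None):
    """Layer job variables: work pool < deployment < flow run.

    Illustrative model only; not Prefect's implementation. Assumes top-level
    keys are replaced by later layers, except `env`, whose entries are merged.
    """
    # Copy so the caller's dictionaries are never mutated.
    resolved = deepcopy(work_pool_defaults)
    for layer in (deployment_overrides or {}, flow_run_overrides or {}):
        for key, value in layer.items():
            if key == "env" and isinstance(value, dict) and isinstance(resolved.get("env"), dict):
                resolved["env"].update(value)
            else:
                resolved[key] = deepcopy(value)
    return resolved


if __name__ == "__main__":
    work_pool = {"env": {"EXECUTION_ENVIRONMENT": "local"}}
    deployment = {"env": {"EXECUTION_ENVIRONMENT": "staging", "MY_NOT_SO_SECRET_CONFIG": "plumbus"}}
    flow_run = {"env": {"MY_NOT_SO_SECRET_CONFIG": "overridden-for-this-run"}}
    print(resolve_job_variables(work_pool, deployment, flow_run))
```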

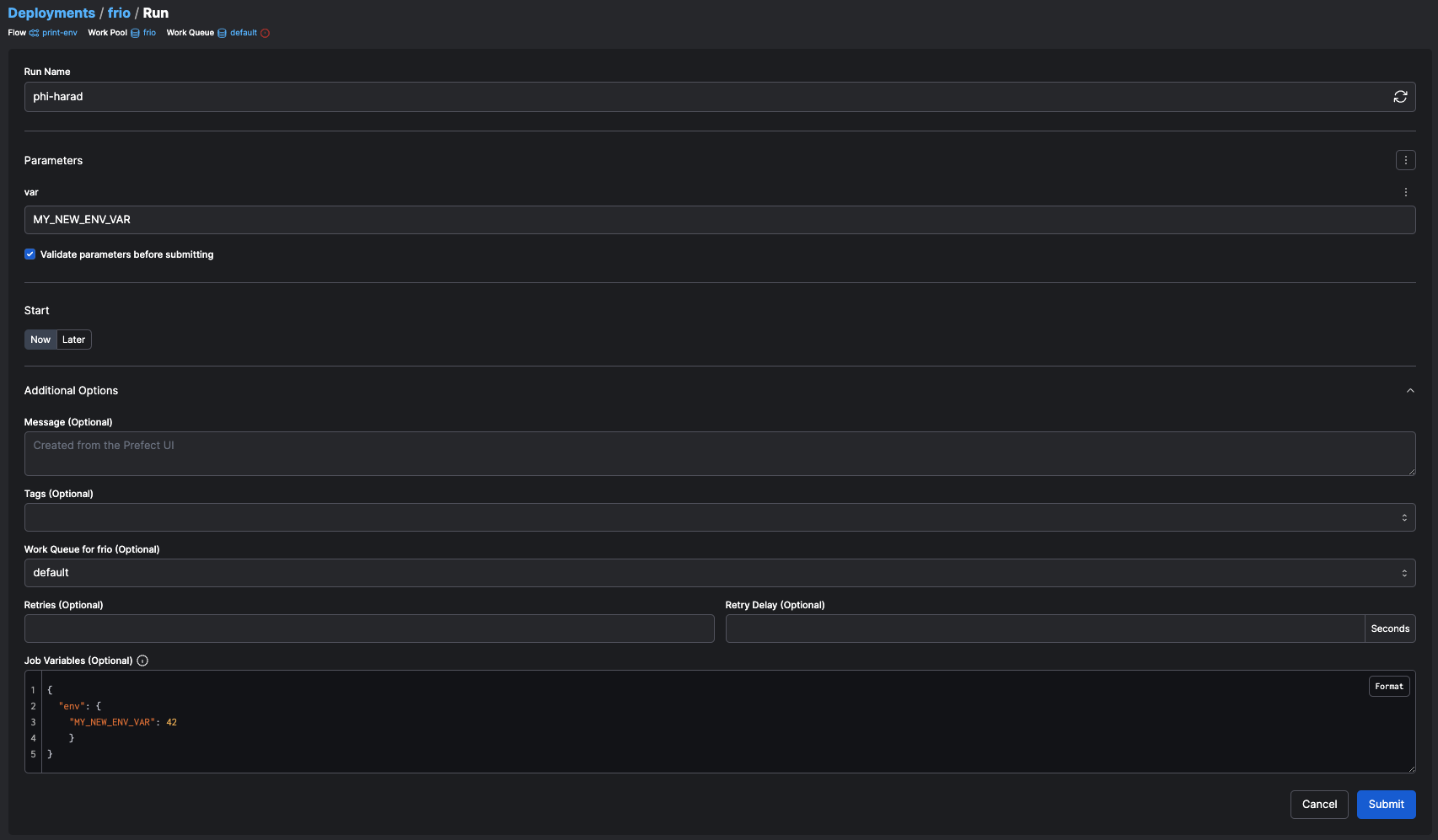

Using the custom run form in the UI¶

Custom runs allow you to pass in a dictionary of variables into your flow run infrastructure. Using the same env example from above, we could do the following:

Using the CLI

Similarly, runs kicked off via CLI accept job variables with the -jv or --job-variable flag.

    prefect deployment run \
      --id "fb8e3073-c449-474b-b993-851fe5e80e53" \
      --job-variable MY_NEW_ENV_VAR=42 \
      --job-variable HELLO=THERE
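Each `--job-variable` flag carries one `KEY=VALUE` pair. As a rough mental model, the sketch below turns a list of such pairs into a dictionary. `parse_job_variable_flags` is a hypothetical helper, not the CLI's own code, and decoding values as JSON where possible (so `42` becomes an integer) is an assumption of this sketch.

```python
import json


def parse_job_variable_flags(pairs):
    """Turn ["KEY=VALUE", ...] into a dictionary.

    Hypothetical helper for illustration only; not Prefect's CLI code.
    Assumes each value is decoded as JSON when possible and kept as a
    plain string otherwise.
    """
    parsed = {}
    for pair in pairs:
        # Split on the first "=" only, so values may themselves contain "=".
        key, sep, raw = pair.partition("=")
        if not sep or not key:
            raise ValueError(f"Expected KEY=VALUE, got {pair!r}")
        try:
            parsed[key] = json.loads(raw)
        except json.JSONDecodeError:
            parsed[key] = raw
    return parsed


if __name__ == "__main__":
    print(parse_job_variable_flags(["MY_NEW_ENV_VAR=42", "HELLO=THERE"]))
```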

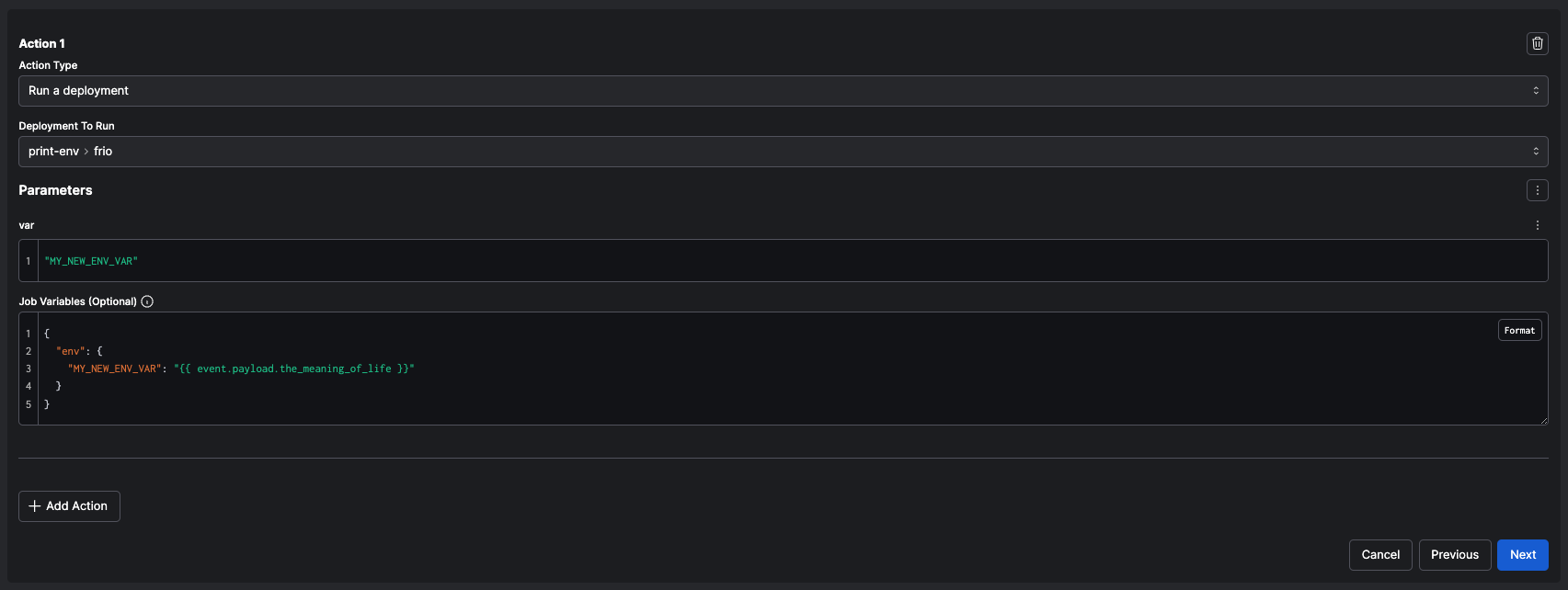

Using job variables in automations¶

Additionally, runs kicked off via automation actions can use job variables, including ones rendered from Jinja templates.